AI Errors Are Statistical Errors

If it trains like a duck and predicts like a duck...

Same Old, Same Old

I may not be the world’s greatest expert on large language models (LLMs) like ChatGPT or Claude, but I do know a fair amount about how they work. And, to me, a person who thinks about and works with data a lot, the core fundamental unvarying thing about how they work is that LLMs are data-based models. That is, to get an LLM, you start with an enormous amount of text data, and you design a model that is capable of discovering as many patterns in that data as possible. Then the actual use of the bot is asking it to use those patterns to figure out what a likely response to, or continuation of, your prompt looks like. There are plenty of details in the process of how exactly they do that, but at its core that’s what is going on.

And this, for me, is fantastic, because it gives me (and, ideally, anyone else who works with data a lot) a much deeper intuitive understanding of how LLMs function. We know a lot about how prediction from data-based models works. LLMs are predictive data-based models. Many of those things still apply. Given we know that AIs are prone to producing undesirable output in some cases, thinking of them as data-based prediction models gives me a better understanding of why they fail, when we’d expect to see more failure, and what kinds of failure are likely to still be there (if perhaps at lower rates) if we progress LLMs by just scaling them up rather than making some sort of structural change. As any statistician knows, a bigger sample can solve some problems, but some problems are just features of the fact that we’re building a data-based model.

LLMs are predictive models. It’s not that unlike the process of taking two variables and describing the relationship between them. If instead of text data I have data on two continuous variables, I can fit a linear model and say things like “if I see an X of 2, then a Y of 4 would make the most sense” in the same way that an LLM might say “if I see a prompt of ‘today, I went to the’ then a next token of ‘store’ would make the most sense.” Just, you know, with hundreds of billions of parameters to work with instead of just an intercept and a slope.
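To make the two-parameter end of that comparison concrete, here is a minimal sketch of fitting an intercept and a slope by ordinary least squares and then asking which Y "makes the most sense" at X = 2. The five data points are invented for illustration and the helper name `fit_line` is my own.

```python
# Toy data, invented for illustration: Y is roughly 2 * X plus a little noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 1.9, 4.2, 5.8, 8.0]

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

intercept, slope = fit_line(xs, ys)

# "If I see an X of 2, what Y makes the most sense?"
prediction = intercept + slope * 2.0
print(f"intercept={intercept:.2f}, slope={slope:.2f}, predicted Y at X=2: {prediction:.2f}")
```

The model never stores the individual points. It stores two numbers that summarize the pattern, and every prediction comes from those two numbers.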

So what kinds of AI errors can we understand as being a result of their status as statistical models? Where can we explain them by metaphor to statistics?

1. Giving Incorrect Information

It is well-known, but not well-known enough, that LLMs are not search engines or information lookup tools, or at least not very good ones. They are fully capable of producing obviously incorrect information and logical or factual inconsistencies. They’re extremely impressive, but really should be reserved for tasks where a non-negligible error rate is acceptable (which is a lot of tasks!).

The issue at the core here is that the predictions of the model do not match the way that the real world works. This is a highly general description, but it’s a general description that encapsulates what basically every empirical methodologist spends their entire lives dealing with.

Let’s pick the three statistical issues that this most obviously analogizes to, although honestly I could go on for a lot longer.

The first is measurement error. Measurement error occurs when a data point in your data set is measured imprecisely. If you’re measuring surface temperatures, and one of your thermometers goes haywire and reports that it’s 1000C in Boise today, you’re going to do a bad job predicting the weather. That’s because, as someone working with temperature data, you don’t actually have data on the temperature. You have data on temperature readings from thermometers. If those two things aren’t the same, then you’re not actually analyzing the thing you want!

Similarly, LLMs have a huge amount of text data. They don’t actually have facts, they have text about facts. Sometimes those two things are the same, but sometimes they are not. In the case of the well-publicized issues with Google’s search AI that I already linked, it was saying you should keep cheese from sliding off your pizza with glue, or that geologists recommend eating rocks. Those things are true! Or, rather, they’re truly in the data set, coming from an old joke Reddit post and an old joke Onion article, respectively. Thermometers are probably pretty darn accurate most of the time. But text is wrong a lot - we already know this intuitively from, you know, reading stuff. We can hope that the correct information is voluminous enough to wash out the errors, but it won’t necessarily, as per the Google AI example. And if incorrect information is much more commonly-repeated than correct information, then watch out!
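A rough sketch of the "wash out the errors" idea, using the thermometer version of the problem. The readings are made up, and the helper `mean_with_bad_reading` is hypothetical. It mixes one haywire reading into progressively more good data.

```python
import statistics

# Hypothetical thermometer readings in Celsius (invented for illustration).
good_readings = [21.0, 22.5, 20.5, 21.5, 22.0]
haywire = 1000.0  # the broken thermometer

def mean_with_bad_reading(n_good_copies):
    """Average the readings when the good data is repeated n_good_copies
    times and a single haywire reading is mixed in."""
    return statistics.mean(good_readings * n_good_copies + [haywire])

true_mean = statistics.mean(good_readings)
print("mean of good readings only:", true_mean)
for copies in (1, 10, 100):
    n_good = copies * len(good_readings)
    print(n_good, "good readings + 1 bad ->", round(mean_with_bad_reading(copies), 2))
```

More good data shrinks the damage, but the average never quite gets back to the truth, and if bad readings grew as fast as good ones the dilution would not happen at all.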

The second is good ol’ modeling. A common refrain is that we should let the data speak for itself. Well, bad news. Data doesn’t usually speak for itself. Or rather, it does, but when it speaks it rarely answers the questions we want. It usually answers different questions. At the basest form we can think of “correlation is not causation” as an example of that. Sure, we can observe that two-lane highways tend to be more congested than one-lane highways. But did adding the second lane cause the highway to be more congested? I dunno. Maybe, maybe not. But simply observing the difference in congestion levels obviously doesn’t tell us that.

If an LLM has learned things about the world, it had to learn those things from the data (where “data” includes both the text it read and the observations of human feedback to its prompt creations). As I spent a whole book discussing, learning stuff from data is possible, but it’s not really possible from data alone. It requires some sort of model to be able to distinguish between the different reasons why we might see the data we see, and a seed of real-world knowledge we already have to distinguish those things. The sun can come up in the morning a million days in a row and you can do a fantastic job predicting that it will come up tomorrow without having to know why it rises. Then you’ll get all confused when Superman reverses the spin.1

The third is the tradeoff between assumptions and precision. One common truism in statistics is that the more assumptions you make, the more precise your results will be. Whether “precise” means “more precisely good” or “more precisely bad” depends on whether those assumptions are true or not. Say I want to predict what share of their games the US women’s team will win at the next World Cup. I can take data from previous World Cup years, adjust for players rotating out, etc., and get a reasonable range of values. If I add the assumption that all players on all opposing teams break their legs but play anyway, I can very precisely, with very little range of possibilities, conclude that the US women’s team will win 100% of their matches. Of course, that’s a bad prediction if my assumption is wrong. That’s a case where the assumption is clearly a bad one. But let’s say I did a random experiment. If I’m not willing to impose the assumption “I randomly assigned treatment” on the data, then I’ll get a very noisy result. If I am, which is true, the result will be far more precise, since the estimator will say “oh phew, you randomly assigned this? I can toss out a lot of the variability I was concerned about and give you an actual answer.”

LLMs make no assumptions about the world when learning from their data. They simply read the data and try to find patterns in it. They bring nothing to the party! At best they bring the assumptions and restrictions of their human trainers, but even these often come after interacting with the data, not before. As a result, we’d expect the predictions of LLMs to be highly imprecise representations of the real world, since they didn’t have the benefit of assumptions they could bring when evaluating the data at hand. Granted, that also means they weren’t imposing any incorrect assumptions on the data. But I’d wager that humans have a lot more correct assumptions about data than incorrect ones, especially when you remember that super obvious stuff like “gravity pulls stuff towards the Earth, not away from it,” and “fish swim underwater” are, most of the time we use those facts, assumptions we bring to the party based on what we’ve previously learned through experience or instruction, rather than things we learn from our observations at the time we make use of that knowledge.

2. Being Highly Suggestible

Something that a lot of people don’t realize is that LLMs are highly suggestible. They don’t actually have independent opinions. They’re predicting the next word. If you ask a question, it will give the most likely response. Often, the most likely response to a question is the correct answer, which is great. But that’s a happy accident; it’s not actually trying to answer the question. It’s trying to continue the text. You are not asking it to respond to what you’re saying, you’re asking what is the most likely thing that comes next after my prompt? That means that every question for an LLM is a leading question, and every prompt is a leading prompt.
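Here is a deliberately tiny sketch of "continue the text with whatever usually comes next." It is a word-pair counter over a four-sentence corpus I made up, nothing like the neural network inside a real LLM, which conditions on the whole prompt. The names `next_word_counts` and `most_likely_next` are my own.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus standing in for the training text.
corpus = [
    "today i went to the store",
    "yesterday i went to the store",
    "today i went to the park",
    "we drove to the store",
]

# Count which word follows each word in the corpus.
next_word_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, following in zip(words, words[1:]):
        next_word_counts[current][following] += 1

def most_likely_next(prompt):
    """Return the most frequent follower of the prompt's last word.
    Assumes that last word appeared somewhere in the corpus."""
    last_word = prompt.split()[-1]
    return next_word_counts[last_word].most_common(1)[0][0]

print(most_likely_next("today i went to the"))
```

Nothing in this code knows or cares where you actually went. It only knows what tends to follow "the" in the text it has seen, which is the sense in which every prompt is a leading prompt.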

One place we’ve seen this is in how LLMs love to give confident answers instead of admitting they don’t know things. That’s because questions are typically followed by answers. Or if you ask it to write something academic or legal, there’s a good chance you’ll get fake invented citations, because academic text contains citations, so it will make things that look like citations.

But the suggestibility of LLMs goes beyond this. I’ll do a few examples. First: can you reach India by sailing west from England? Let’s ask Claude.

Of course not! But let’s ask the same question again while using a silly renn faire dialect.

I asked it for a factual answer, and didn’t ask it to tell me what people from hundreds of years ago thought it would be. But it’s roleplaying with me instead of giving a factual answer, because you don’t continue the conversation I started by answering my question directly and correctly, you continue it by continuing the hokey bit. It will do this even in scenarios that are less obviously “roleplaying”. All I did was put the conversation in a context where the correct response is not to answer “no,” and so it didn’t tell me the real-world correct answer of “no.” To get the answer you want, just construct the context where the response you want is how the conversation would naturally continue (and the LLM authors haven’t programmed in a workaround to the specific case you’re testing). This is the process behind a lot of LLM jailbreaking.

Pinning down LLM suggestibility a bit more cleanly, we can watch AI justify its answer… even if that’s not its answer. I’ll use the OpenAI API since it will let me edit the AI’s side of the conversation and tell it what its opinion is.2 First I’ll ask it what kind of flour to use for pizza dough:

    from openai import OpenAI

    # Placeholder key; substitute your own.
    client = OpenAI(api_key='my_openai_key')

    # Ask the question with no prior assistant turn.
    completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": "You are a helpful baking tutor."},
            {"role": "user", "content": "What kind of flour is better for pizza dough, bread flour or AP? Just say which is better."},
        ]
    )
    completion.choices[0].message.content
    #=> 'Bread flour is better for pizza dough.'

OK, so bread flour is better. And why is that, GPT? We’ll remind it what answer it gave with an ‘assistant’ message.

    # Replay the conversation, including the answer it actually gave.
    completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": "You are a helpful baking tutor."},
            {"role": "user", "content": "What kind of flour is better for pizza dough, bread flour or AP? Just say which is better."},
            {"role": "assistant", "content": "Bread flour is better for pizza dough."},
            {"role": "user", "content": "Interesting! Is it better because of the gluten content?"}
        ]
    )
    completion.choices[0].message.content
    #=> 'Yes, exactly! Bread flour has a higher protein content, which results in more gluten formation. This gives the dough a chewier texture and better structure, ideal for holding toppings and achieving a nice crust.'

Now let’s tell it that its “opinion” is different than what it told us it was and watch it continue the conversation as it is designed to do, justifying an entirely different answer.

    # Same conversation, but with the assistant turn edited to the opposite answer.
    completion = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[
            {"role": "system", "content": "You are a helpful baking tutor."},
            {"role": "user", "content": "What kind of flour is better for pizza dough, bread flour or AP? Just say which is better."},
            {"role": "assistant", "content": "All-purpose flour is better for pizza dough."},
            {"role": "user", "content": "Interesting! Is it better because of the gluten content?"}
        ]
    )
    completion.choices[0].message.content
    #=> 'Yes, exactly! All-purpose flour has a moderate gluten content that provides a good balance of chewiness and tenderness for pizza crust. However, bread flour, which has a higher gluten content, can also be used for a chewier and more textured crust. It ultimately depends on your preference!'

(And did you notice the extra level of suggestibility where its explanation of why each kind of flour was better was in both cases the exact reason I proposed, gluten? In this case that proposition is, I think, correct, but try proposing an incorrect explanation to a correct answer and watch it usually say “yes, that’s the reason!”)

So what in statistics explains what we’re seeing here? In this case, it’s the same consequence you get with any algorithm or logical system (which includes statistical methods): you get what you ask for, not what you want.

There are almost too many potential examples to use. You want to see how effective hospitals are at curing the sick, so you compare health levels between people who just went to the hospital and those who didn’t, shockingly finding that those who went to the hospital are sicker. But while you wanted the impact of hospitals, you asked for a comparison of health levels between those who had been to the hospital (who likely went because they were sicker than the general population) and those who didn’t. Turns out you didn’t want what you asked for.
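A quick simulation of the hospital comparison, as a sketch under assumptions I am making up: health is a single number, only fairly sick people go to the hospital, and a visit improves health by a fixed +0.5. The function `simulate_person` and all of the numbers are hypothetical.

```python
import random

rng = random.Random(0)  # fixed seed so the run is repeatable

def simulate_person():
    """One hypothetical person. Sicker people go to the hospital, and the
    hospital genuinely helps them (both are assumptions of this toy)."""
    baseline_health = rng.gauss(0, 1)           # higher = healthier
    goes_to_hospital = baseline_health < -0.5   # only the fairly sick go
    treatment_effect = 0.5 if goes_to_hospital else 0.0
    return goes_to_hospital, baseline_health + treatment_effect

people = [simulate_person() for _ in range(100_000)]
visited = [health for went, health in people if went]
stayed_home = [health for went, health in people if not went]

# What you asked for: the raw gap between the two groups.
naive_difference = sum(visited) / len(visited) - sum(stayed_home) / len(stayed_home)
print(f"naive comparison: {naive_difference:.2f} (true effect of a visit: +0.50)")
```

The hospital helps every single person who walks in, and the comparison still comes out strongly negative, because the comparison answers "who goes to hospitals?" rather than "what do hospitals do?"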

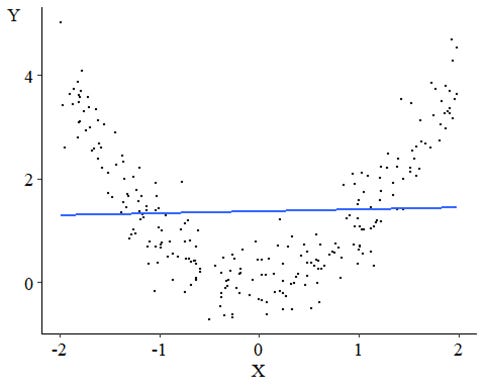

Or even more generally, you want to know the relationship between two variables X and Y so you calculate the correlation find that it’s near 0. So no relationship! But then you graph the data and find that it looks like this:

Oops! You wanted the relationship between the two, but you asked for the best estimate of a linear relationship (that’s what correlations do).
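One shape that produces this result is a symmetric U, which I am assuming here for illustration (the data behind the graph above may differ). In this sketch Y is completely determined by X, and the correlation still comes out at essentially zero. The `pearson` helper is written out by hand so the example needs nothing beyond the standard library.

```python
import math

# X evenly spaced from -5 to 5; Y is exactly X squared.
xs = [x / 10 for x in range(-50, 51)]
ys = [x ** 2 for x in xs]

def pearson(xs, ys):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

r = pearson(xs, ys)
print(f"correlation between X and X squared: {r:.6f}")
```

The left half of the U slopes down, the right half slopes up, and the best single straight line through both is flat.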

With LLMs, you are always asking for them to continue your prompt in the most likely way, no matter what it is you want. So of course they’re suggestible. They only respond to suggestions! That’s the whole point of LLMs!

3. Weakness Around The Edges

Something you may notice about LLM output is that it is much more accurate about popular stuff than it is about unpopular stuff.

If you’ve used it for code, you may have noticed it’s way better at Python than, say, Stata. Or just within Python, it’s way better in pandas than in datatable or polars (similar, less-popular packages).

I have a few pet examples I like for demonstrating this.

One is, well, my book. One nice thing about having a big long text that you wrote yourself is that it makes it really easy to test the many, many, many tools that promise to summarize long texts for you. I just upload my own book and ask it questions I know the answer to.

These tools (and I’ve tested quite a few) tend to do quite well at summarizing my book… when my book agrees with what everyone else says. Where they often make mistakes, however, is when my book contradicts common advice you’ll find online. For example, one thing I ask these summarization tools is how my book would answer the question “I have a linear regression model where I am regressing an outcome on eight different predictors. However, three of the predictors are insignificant. What should I do?” My book does not suggest removing predictors just because they’re insignificant, and if anything is very explicit that insignificance is not a problem to be solved. But I will generally get responses saying that the book recommends removing the predictors, which is the advice you’ll often get from other sources.

Outside of code, an example I’ve looked into a few times is the Magic: the Gathering variant called Three Card Blind. Three Card Blind is a very simple version of Magic where decks contain only three cards, each player goes first once, and there’s no randomness. The question of who wins is a very simple little deterministic puzzle.

I try giving LLMs (GPT 3.5, 4, 4o, and Claude Sonnet) pretty simple Three Card Blind matchups and asking who wins. These LLMs are surprisingly good at knowing what the cards are when I name them, they have heard of Three Card Blind and can repeat the rules back, and the more recent models can even track the steps of the game pretty well as they play out the matchup. But they are awful at solving the puzzles. They mess up extremely easy matchups.

So what’s the statistical issue here? There are two.

The first is obvious: sample size! Statistical estimation and prediction get way better the bigger your estimation sample is. In a lot of traditional statistical applications, if you quadruple your sample size, your estimate gets about twice as good. That exact same rate doesn’t necessarily apply to LLMs, but it sure is the case that more data = better performance.
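The "quadruple the sample, double the precision" rule is easy to check by simulation. This is a minimal sketch for the simplest case, estimating a mean from standard normal data. The function name, the seed, and the number of repeats are arbitrary choices of mine.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the run is repeatable

def spread_of_sample_means(sample_size, n_repeats=2000):
    """Draw many samples of a given size from a standard normal and report
    how much the sample mean bounces around from one sample to the next."""
    means = [
        statistics.fmean(rng.gauss(0, 1) for _ in range(sample_size))
        for _ in range(n_repeats)
    ]
    return statistics.stdev(means)

small = spread_of_sample_means(100)
large = spread_of_sample_means(400)
print(f"n=100: {small:.3f}   n=400: {large:.3f}   ratio: {small / large:.2f}")
```

The ratio should land close to 2: four times the data, half the noise in the estimate. That is the square-root-of-n rule, and it is also why the last bit of precision is so expensive to buy with data alone.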

So why can these models handle Python way better than Stata? Because there’s massively more Python code out on the internet for it to train on than there is Stata code.3 It doesn’t matter that all the Stata docs are available online and it technically could figure out everything it needs by just reading those if it were a hyperintelligent being, because it doesn’t read text to learn concepts and facts, it reads text to improve its predictive statistical model. It can answer a question about “how can I code up X in Python” with less error than “how can I code up X in Stata” for the same reason that we can be way more confident about the results of an experiment with 100,000 observations than one with 20.

A similar issue explains the Three Card Blind results. Three Card Blind just isn’t all that popular. I think the main public place it’s played these days is on a subreddit with 304 members, and even there a lot of the discussion isn’t showing examples of how to work out the matches. Unironically, it’s impressive it can do anything with that amount of data, even if that anything doesn’t include “being right”.

The Three Card Blind results, however, are also linked to another statistical issue, which also explains why summary answers for my book can be wrong. Not only is the sample size for Three Card Blind small, it’s very tiny relative to the sample size it has for Magic the Gathering as a whole. It’s hard to notice unless you know the game well, but in watching the LLMs try to play Three Card Blind, the biggest errors they make are often plays that would make a lot of sense if you were playing regular Magic, but are senseless in Three Card Blind.4

Similarly, with my book, even if we’ve told the LLM to specifically summarize the PDF I’ve given it, my book is very small relative to the amount of training data it surely has on statistics and causal inference in general. Again, it picks the answer that best represents the corpus of text it has.

As a final example, let’s take the Monty Hall problem. Monty Hall is a famous probability problem that I won’t explain in full, just click that link. But the short of it is that there is a prize randomly hidden behind one of three doors. You pick one of those doors, then have one of the other, unpicked doors that does not contain the prize taken away. You have an option to either stick to your original door, or switch to the remaining door. If you switch, you have a 2/3 (not 1/2) chance of winning the prize. It’s a famously unintuitive, but unassailably correct, answer to a conditional probability problem.
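If the 2/3 feels wrong, simulating the standard version of the game is more convincing than any proof. This is a sketch of the standard problem only, exactly as described above, not the altered version I use later. The function `play` is my own naming.

```python
import random

rng = random.Random(1)  # fixed seed so the run is repeatable

def play(switch):
    """Play one round of the standard game; return True if the prize is won."""
    doors = [0, 1, 2]
    prize = rng.choice(doors)
    pick = rng.choice(doors)
    # An unpicked door that does not hide the prize is taken away.
    removed = rng.choice([d for d in doors if d != pick and d != prize])
    if switch:
        # Move to the one door that is neither the original pick nor removed.
        pick = next(d for d in doors if d != pick and d != removed)
    return pick == prize

trials = 100_000
switch_rate = sum(play(True) for _ in range(trials)) / trials
stay_rate = sum(play(False) for _ in range(trials)) / trials
print(f"win rate when switching: {switch_rate:.3f}, when staying: {stay_rate:.3f}")
```

Note that the 2/3 depends on the rule in the commented line: the door taken away is always one that hides no prize. Change how that door gets chosen and the probabilities can change with it.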

Let me pose the Monty Hall problem to GPT-4o. But I will change the problem so that the answer actually is 1/2 and not 2/3 (can you figure out why?). I won’t post GPT’s full response as it goes on for quite a while but it carefully works through its logic and conditional probability calculations… and incorrectly tells me the answer is 2/3. Even though it notices and explicitly points out that I’m asking a twist on the problem, it immediately forgets that and begins walking through the standard proof (not shown, but trust me). Why? Because there are eight zillion explanations online, and in its training data, of why the standard Monty Hall problem has an answer of 2/3 and not 1/2. It doesn’t matter that the math problem changed. The words I’m using are usually followed by the answer 2/3.5

(lengthy GPT working-through of the problem cut here)

We can analogize this to two different things that happen in statistics. For one, your estimates tend to represent the bulk of your data. If you estimate the effectiveness of a pill designed to prevent cervical cancer, but for some reason 99% of your sample is people who don’t have a cervix, you’ll estimate that the pill barely moved cancer rates at all. Then if you’re a doctor asked whether someone with a cervix should take the pill, you’ll look at the study and conclude that no, they shouldn’t. Similarly, if 99% of your Magic the Gathering data has nothing to do with Three Card Blind, then you will also make bad recommendations when asked what to do in Three Card Blind. If 99% of the times you see the words “Monty Hall” and “probability” together it’s associated with the answer 2/3, then you’ll say the answer is 2/3.
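The arithmetic behind the pill example, as a sketch with risk numbers I invented purely for illustration. Only the 99% figure comes from the example above.

```python
# Share of the study sample who could possibly benefit from the pill.
share_with_cervix = 0.01   # the other 99% cannot get cervical cancer at all

# Hypothetical risks among people with a cervix (made-up numbers).
risk_without_pill = 0.02
risk_with_pill = 0.01

effect_with_cervix = risk_with_pill - risk_without_pill   # the pill halves the risk
effect_without_cervix = 0.0                               # nothing to prevent

# The whole-sample estimate is a weighted average of the two group effects.
pooled_effect = (
    share_with_cervix * effect_with_cervix
    + (1 - share_with_cervix) * effect_without_cervix
)
print(f"effect among people with a cervix: {effect_with_cervix:+.4f}")
print(f"effect estimated from the whole sample: {pooled_effect:+.6f}")
```

A pill that halves the risk for the people it is meant for shows up as a change a hundred times smaller in the pooled estimate, because the estimate describes the bulk of the sample.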

For another, if you’re trying to use statistics to minimize predictive error, then a model you train will work its hardest to minimize error for the bulk of the data. Liechtenstein is a country that contains just under 40,000 people. As far as sample sizes go, that’s plenty big enough to learn some things about what characteristics people in Liechtenstein tend to have - are they tall? Short? What religion are they? And so on. But if I’m going to build a predictive model to classify whether a given person I observe is from Liechtenstein based on their height, religion, and other factors, I’m best off ignoring all of that junk and just predicting that nobody is from Liechtenstein, no matter their characteristics. Since the country is only 0.00049% of the total world population, I’m going to be right basically all of the time. So even if we do have plenty of data, and even if we specify that we want the rare situation, my model-training process is going to perform better if it focuses on reflecting the most common situations. And thus the LLM reports that my book answers questions in a very similar way to how the internet answers questions.
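To put numbers on the "predict that nobody is from there" classifier, here is a back-of-the-envelope sketch. The world population figure is a rough value I am assuming, and the 40,000 is rounded up from "just under 40,000."

```python
world_population = 8_100_000_000    # rough figure, assumed for illustration
country_population = 40_000         # "just under 40,000", rounded

share = country_population / world_population

# A "model" that ignores every characteristic and always answers "not from there"
# is wrong only for the people who actually are from there.
accuracy_always_no = 1 - share
found_by_always_no = 0              # it never identifies a single resident

print(f"share of world population: {share * 100:.5f}%")
print(f"accuracy of always saying no: {accuracy_always_no * 100:.5f}%")
print(f"residents correctly identified: {found_by_always_no}")
```

If the training objective is overall accuracy, this do-nothing model is almost impossible to beat, even though it is useless for the one question you built it to answer.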

So It Shall Be?

I want to be clear: this is not an article about dunking on LLMs for their failures. Frankly, their successes are astonishing, even if the hype has perhaps oversold them. I have, since the very beginning, pegged LLMs as being analogous to 3D printing: an actually real technical achievement and useful thing that nevertheless fails to live up to the hype.

This article is about understanding why LLMs err. They do produce errors! A lot of them! I work with them a lot and see the errors! But why? Understanding what they are (data-based models) can go a long way towards understanding where errors come from, and knowing how to look out for those errors, including anticipating errors where I haven’t specifically mentioned them.

This article is also not a claim that LLMs can never get past these problems. I am making the claim that data-based predictive models will always be subject to these problems. However, LLMs have clearly been able to reduce the extent to which they’re subject to these issues by sheer scale alone (if evidenced only by the older failures I tested once again in writing this article that no longer fail). In these cases, LLMs are perfectly capable of incorporating data like “Haha, look at LLMs, they fail in case X. The correct answer should be Y!” and from then on being more likely to produce a correct answer in case X, even if they don’t know why they made the correction. So they can hammer down mistakes one by one - senseless predictions can be stubbornly effective sometimes - but their structure as data-based models means they’ll always be subject to these conceptual issues.

Further, who says that LLMs have to stay entirely data-based models? We’re getting to the limits of my technical understanding of LLMs, but I don’t see any reason why they couldn’t hook an LLM chatbot up to a (partial) database of known facts, or set of causal concepts, and let it use those as a basis for responses that would avoid many of the issues I’ve outlined here. That’s not outside the realm of possibility! I’m not saying these tools can’t do that! I’m just saying they can’t do it while remaining models that purely learn patterns from data. We’ve had a looong time in statistics to figure out ways to get past those issues while remaining models that purely learn patterns from data, and time and again we learn that we have to add something else to get there. LLMs can get there, but only by adding something else.

1. In this particular case, sure, there are plenty of explanations online of why the sun rises in the morning that explain all the mechanics of it, and an LLM could certainly repeat those explanations back to you. But that’s repeating back data. Not a causal inference task! Take it to any domain where the answer isn’t in the training data a zillion times - trying to infer how the world works in those instances is a fundamentally harder problem, and it’s a difference of degree, not kind. If an LLM based purely on statistical prediction modeling can correctly answer a question like that, then it has found a way to do so other than learning proper inference from a data set.

2. How well this particular editing-their-responses trick works depends on the quality of the model and the kind of thing you’re trying to tell it that it said. For extremely obvious factual errors (like, say, that Mary Todd Lincoln was the first US president), this would work on older models and you could get it confidently explaining why that false fact is true and where it sourced it. That doesn’t work as often on the newest models, but for less shocking or more obscure factual errors, or for any response that’s somewhat subjective, it still works a dream!

3. Stata doesn’t even show up on this graph, and look where Python is!

4. For example, in one of the test games, one of the players has a chance to force the other to discard their only source of mana, which would win the game right there. However, the LLM does not recommend this play, and instead targets the wrong thing with its discard spell. In regular Magic, most discard spells that let you pick what is discarded can’t force a land to be discarded, and even if you could this would often be the wrong play, as it’s often easy for the opponent to get more land. Not so in Three Card Blind, where there are only three cards total and often only one mana source, without which you can’t do anything. It makes the play you’d typically see in regular Magic, not the one that obviously makes way more sense in this weird custom format.

5. Another good example of this (that doesn’t really work any more) is to ask “which weighs more: a pound of feathers or two pounds of bricks?” GPT3 would happily tell you they weighed the same, because questions that look like that are very well-represented in the training data, and they all say that they weigh the same, since the question is typically a pound of feathers vs. a pound of bricks. Of course, in newer models, the trick version of the question is also in the training data, which is probably why it doesn’t work any more. But there’s always another trick like this out there, like my Monty Hall variant (which presumably will stop working in the future if enough people use it maybe?).